About the Workshop

This workshop will bring together researchers in academia and industry who have an interest in making smart systems explainable to users and therefore more intelligible and transparent.

Smart systems that apply complex reasoning to make decisions and plan behavior, such as clinical decision support systems, personalized recommendations, and machine learning classifiers, are difficult for users to understand. While research to make systems more explainable and therefore more intelligible and transparent is gaining pace, there are numerous issues and problems regarding these systems that demand further attention. The goal of this workshop is to bring researchers and industry together to address these issues, such as when and how to provide an explanation to a user. The workshop will include a keynote, poster panels, and group activities, with the goal of developing concrete approaches to handling challenges related to the design and development of explainable smart systems.

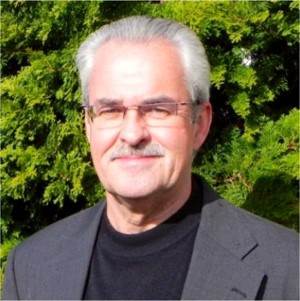

Keynote Speaker: David Gunning (DARPA)

David Gunning is a DARPA program manager in the Information Innovation Office (I2O). Dave has an extensive background in the development and application of artificial intelligence (AI) technology. At DARPA, Dave now manages the Explainable AI (XAI) and the Communicating with Computers (CwC) programs. Dave comes to DARPA as an IPA from the Pacific Northwest National Laboratory (PNNL). Prior to PNNL, Dave was a Program Director for Data Analytics and Contextual Intelligence at the Palo Alto Research Center (PARC), a Senior Research Manager at Vulcan Inc., a Program Manager at DARPA (twice before), SVP of SET Corp., VP of Cycorp, and a Senior Scientist in the Air Force Research Labs. At DARPA previously, Dave managed the Personalized Assistant that Learns (PAL) project that produced Siri and the Command Post of the Future (CPoF) project that was adopted by the US Army as their Command and Control system for use in Iraq and Afghanistan. Dave holds an M.S. in Computer Science from Stanford University, an M.S. in Cognitive Psychology from the University of Dayton, and a B.S. in Psychology from Otterbein College.

DARPA’s Explainable Artificial Intelligence

You may download the slides of the keynote.

Dramatic success in machine learning has led to a torrent of Artificial Intelligence (AI) applications. Continued advances promise to produce autonomous systems that will perceive, learn, decide, and act on their own. However, the effectiveness of these systems is limited by their current inability to explain their decisions and actions to human users. The Department of Defense is facing challenges that demand more intelligent, autonomous, and symbiotic systems. Explainable AI, especially explainable machine learning, will be essential if future warfighters are to understand, appropriately trust, and effectively manage an emerging generation of artificially intelligent machine partners.

XAI is developing new machine-learning systems with the ability to explain their rationale, characterize their strengths and weaknesses, and convey an understanding of how they will behave in the future. The strategy for achieving that goal is to develop new or modified machine-learning techniques that will produce more explainable models. These models will be combined with state-of-the-art human-computer interface techniques capable of translating models into understandable and useful explanation dialogues for the end user. Our strategy is to pursue a variety of techniques in order to generate a portfolio of methods that will provide future developers with a range of design options covering the performance-versus-explainability trade space.

XAI research prototypes will be tested and continually evaluated throughout the course of the program. At the end of the program, the final delivery will be a toolkit library consisting of machine learning and human-computer interface software modules that could be used to develop future explainable AI systems. After the program is complete, these toolkits would be available for further refinement and transition into defense or commercial applications.

Schedule

| Time | Activity |
|---|---|
| 09:00-09:15 | Welcome address |
| 09:15-10:00 | Keynote talk by Dave Gunning: “What are the current challenges for explainable smart systems?” |
| 10:00-10:45 | Themed poster panels (authors of each accepted paper should prepare a poster giving an overview of the paper and will have 3 minutes to present it; posters must not exceed A1 format, roughly 594 x 841 mm or 23.4 x 33.1 inches) |
| 10:45-11:00 | Coffee Break |
| 11:00-11:30 | Introduction of activities, sub-group assignment (3-4 groups; no more than 6 per group) and example systems |
| 11:30-12:15 | Sub-group activity: decide which system to focus on and explore concrete challenges for that system |
| 12:15-12:30 | Presentation of sub-group activity |
| 12:30-13:30 | Lunch |
| 13:30-15:00 | Sub-group activity: “designing” concrete approaches for the challenges identified for the system of focus |
| 15:00-15:15 | Coffee Break |
| 15:15-16:15 | Presentation of sub-group activity and “design crit” |
| 16:15-16:45 | Sub-group activity: write-up and planning next steps |
| 16:45-17:00 | Workshop wrap-up |

Papers

You may download all accepted position papers for this workshop here.

- Ajay Chander, Ramya Srinivasan, Suhas Chelian, Jun Wang and Kanji Uchino. Working with Beliefs: AI Transparency in the Enterprise

- Jonathan Dodge, Sean Penney, Andrew Anderson and Margaret Burnett. What Should Be in an XAI Explanation? What IFT Reveals

- Tim Donkers, Benedikt Loepp and Jürgen Ziegler. Explaining Recommendations by Means of User Reviews

- Malin Eiband, Hanna Schneider and Daniel Buschek. Normative vs. Pragmatic: Two Perspectives on the Design of Explanations in Intelligent Systems

- Melinda Gervasio, Karen Myers, Eric Yeh and Boone Adkins. Explanation to Avert Surprise

- Karen Guo, Danielle Pratt, Angus MacDonald III and Paul Schrater. Labeling images by interpretation from Natural Viewing

- Tony Leclercq, Maxime Cordy, Bruno Dumas and Patrick Heymans. Representing Repairs in Configuration Interfaces: A Look at Industrial Practices

- O-Joun Lee and Jason J. Jung. Explainable Movie Recommendation Systems by using Story-based Similarity

- Brian Y. Lim, Danding Wang, Tze Ping Loh and Kee Yuan Ngiam. Interpreting Intelligibility under Uncertain Data Imputation

- Jeremy Ludwig, Annaka Kalton and Richard Stottler. Explaining Complex Scheduling Decisions

- Michael Pazzani, Amir Feghahati, Christian Shelton and Aaron Seitz. Explaining Contrasting Categories

- Jakub Sliwinski, Martin Strobel and Yair Zick. An Axiomatic Approach to Linear Explanations in Data Classification

- Alison Smith and Jim Nolan. The Problem of Explanations without user Feedback

- Simone Stumpf, Simonas Skrebe, Graeme Aymer and Julie Hobson. Explaining smart heating systems to discourage fiddling with optimized behavior

- Jasper van der Waa, Jurriaan van Diggelen and Mark Neerincx. The design and validation of an intuitive confidence measure